Robot

Four robotic tele-surgeries were successfully performed at Delhi’s Sir Ganga Ram Hospital, with surgeons remotely operating from Vapi, Gujarat, using the Mizzo Endo 4000 system. High-speed networks enabled real-time transmission of surgical commands and visuals.

Updated 10 hours ago

Earlier this year, the company showcased its Lingxi X2 robot cycling in an open space, as well as performing the notoriously difficult Webster flip, a gymnastics move that involves a forward somersault with a back-leg take-off.

Updated 11 hours ago

Cardi B falls down while dancing with robot ahead of Super Bowl

Updated 2 days ago

US researchers develop smart skin that changes shape and texture

Updated 5 days ago

Chinese firm MirrorMe unveils world's fastest running humanoid robot

Updated 6 days ago

Humanoid robot Moya debuts with lifelike movement and expressions

Updated 7 days ago

Robot welders to enter live industry tests in Louisiana

Updated 9 days ago

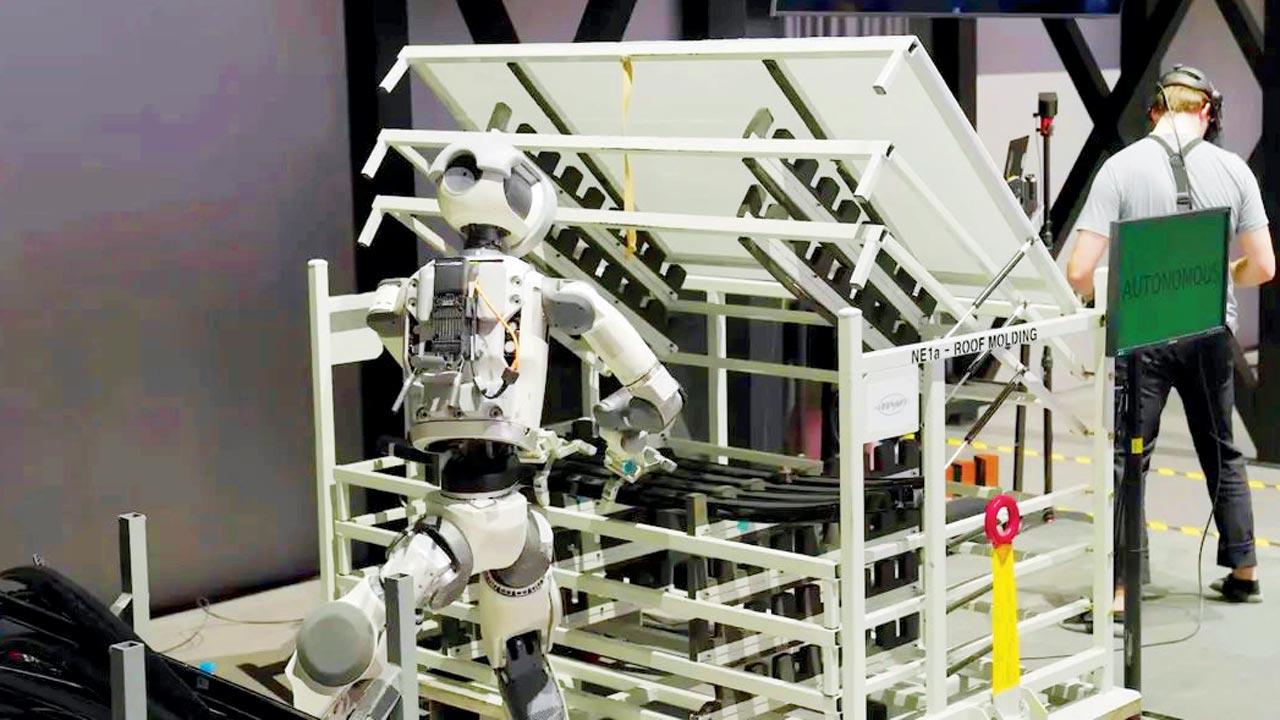

Hyundai begins testing humanoid robots at US manufacturing plants

Updated 12 days ago

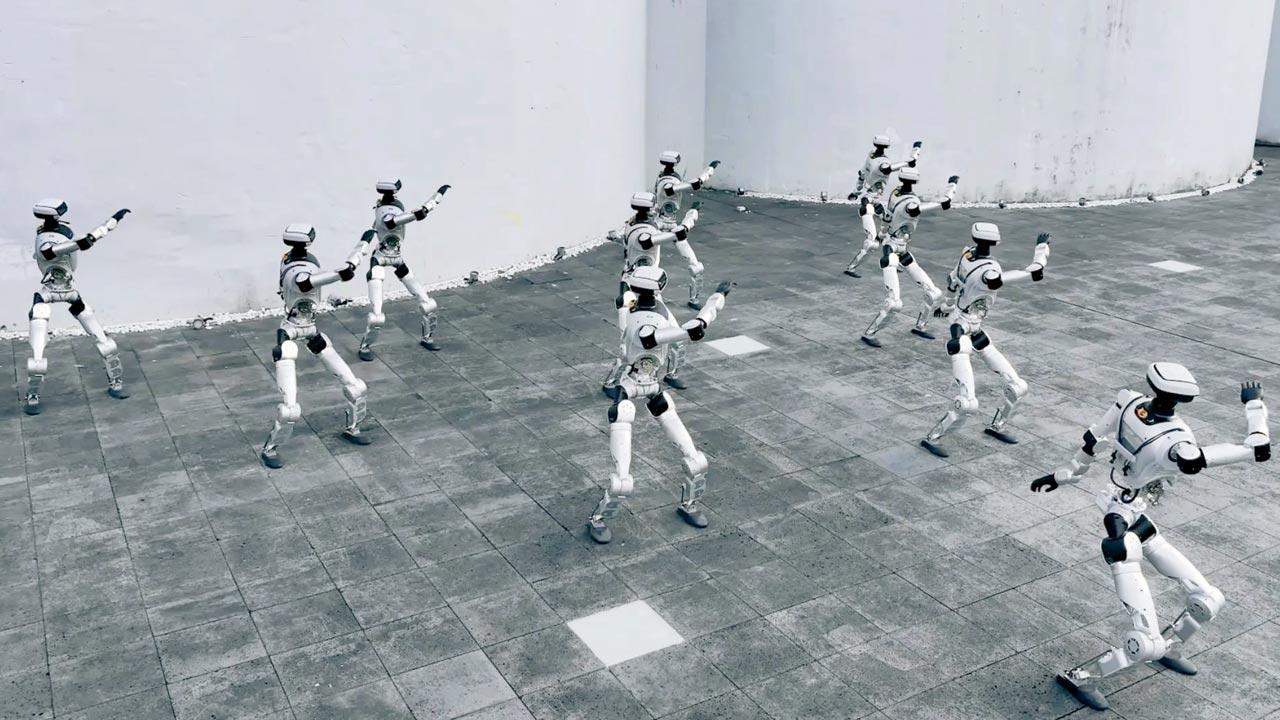

Chinese firm showcases autonomous deployment of 18 humanoid robots

Updated 13 days ago

BMC school hosts first-ever drone and robotics workshop for students

Updated 15 days ago